MOOC List is learner-supported. When you buy through links on our site, we may earn an affiliate commission.

The theory, skills, and techniques learned in this course have applications to AI and machine learning. In these popular fields, the engine driving the systems that interpret, learn from, and use external data is often precisely the matrix analysis developed in this course.

Successful completion of this specialization will prepare students to take advanced courses in data science, AI, and mathematics.

This course is part of the Linear Algebra from Elementary to Advanced Specialization.

Syllabus

Orthogonality

In this module, we define a new operation on vectors called the dot product. This operation returns a scalar that is related to the angle between two vectors, the distance between them, and their lengths. After working through the theory and examples, we home in on unit (length one) and orthogonal (perpendicular) vectors. These special vectors will be pivotal in the course as we define linear transformations and special matrices built only from these vectors.
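As an illustration (a minimal sketch, not course material), the quantities described above can all be computed from the dot product alone. The function names below (dot, norm, distance, angle, normalize, is_orthogonal) are hypothetical helpers chosen for this example, and vectors are assumed to be plain Python lists of numbers.

```python
import math

def dot(u, v):
    """Dot product: the sum of componentwise products."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Length of u, computed as the square root of u . u."""
    return math.sqrt(dot(u, u))

def distance(u, v):
    """Distance between u and v: the length of u - v."""
    return norm([a - b for a, b in zip(u, v)])

def angle(u, v):
    """Angle (radians) between nonzero u and v, from u . v = |u||v| cos(theta)."""
    c = dot(u, v) / (norm(u) * norm(v))
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, c)))

def normalize(u):
    """Unit vector (length one) pointing in the direction of nonzero u."""
    n = norm(u)
    return [a / n for a in u]

def is_orthogonal(u, v, tol=1e-12):
    """u and v are orthogonal (perpendicular) when their dot product is zero."""
    return abs(dot(u, v)) <= tol
```

For example, [3, 4] and [-4, 3] have dot product -12 + 12 = 0, so they are orthogonal; [3, 4] has length 5 and normalizes to [0.6, 0.8].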

Orthogonal Projections and Least Squares Problems

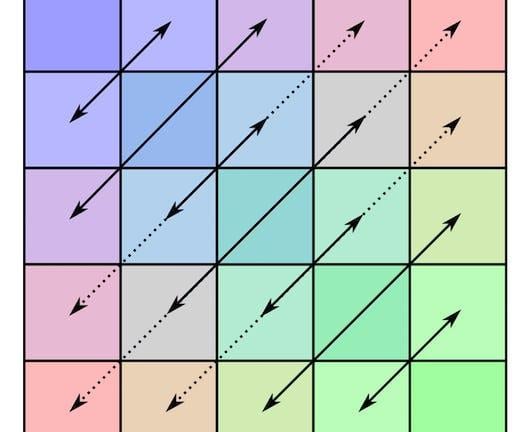

In this module we study a special type of transformation called the orthogonal projection. We have already seen the formula for the orthogonal projection onto a line; we now generalize it to projection onto any subspace W. The formula requires a basis of W whose vectors are both orthogonal and normalized, and we show how the Gram-Schmidt Process produces such a basis from any basis of W.
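The two steps in this module can be sketched as follows (an illustrative sketch under stated assumptions, not the course's own code). gram_schmidt turns a linearly independent list of vectors into an orthonormal basis of the same subspace W, and project applies the formula proj_W(y) = (y . u_1) u_1 + ... + (y . u_p) u_p for an orthonormal basis u_1, ..., u_p. The helper names are hypothetical, vectors are assumed to be plain Python lists, and the loop uses the "modified" ordering of Gram-Schmidt, which is mathematically equivalent to the classical process but behaves better in floating point.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(basis, tol=1e-12):
    """Return an orthonormal list spanning the same subspace W as `basis`.
    Assumes the input vectors are linearly independent."""
    ortho = []
    for v in basis:
        w = list(v)
        # Remove the component of w along each orthonormal vector found so far
        # (modified Gram-Schmidt: project the partially reduced w, not v).
        for q in ortho:
            c = dot(w, q)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        n = math.sqrt(dot(w, w))
        if n <= tol:
            raise ValueError("input vectors are not linearly independent")
        # Normalize so the new basis vector has length one.
        ortho.append([wi / n for wi in w])
    return ortho

def project(y, orthonormal_basis):
    """Orthogonal projection of y onto W = span(orthonormal_basis)."""
    p = [0.0] * len(y)
    for u in orthonormal_basis:
        c = dot(y, u)
        p = [pi + c * ui for pi, ui in zip(p, u)]
    return p
```

For instance, with W spanned by [1, 1, 0] and [1, 0, 1], projecting y = [1, 2, 3] gives approximately [7/3, 2/3, 5/3], and the residual y - proj_W(y) = [-4/3, 4/3, 4/3] is orthogonal to both spanning vectors, as expected of an orthogonal projection.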

Symmetric Matrices and Quadratic Forms

In this module we diagonalize symmetric matrices. The symmetry of a matrix A forces a beautiful relationship between its eigenspaces: eigenspaces corresponding to distinct eigenvalues are mutually orthogonal. After normalizing, these orthogonal eigenvectors form a very special basis of R^n, with extremely useful applications in data science, machine learning, and image processing. We also introduce quadratic forms, functions of degree two on vectors that use symmetric matrices in their definition. Quadratic forms are then completely classified by the signs of the eigenvalues of their matrices.
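To make these ideas concrete in the smallest interesting case, here is a sketch restricted to 2x2 symmetric matrices A = [[a, b], [b, c]] (an assumption made so the eigenvalues have a closed form; larger matrices need a numerical eigenvalue routine). sym_eig_2x2 returns the eigenvalues and orthonormal eigenvectors, and classify_quadratic_form labels Q(x, y) = a x^2 + 2 b x y + c y^2 by the signs of those eigenvalues. Both function names and the tolerance parameter are hypothetical.

```python
import math

def sym_eig_2x2(a, b, c):
    """Eigenvalues (largest first) and orthonormal eigenvectors of [[a, b], [b, c]]."""
    mean = (a + c) / 2
    r = math.hypot((a - c) / 2, b)  # half the gap between the two eigenvalues
    lam1, lam2 = mean + r, mean - r
    if b == 0:
        # Already diagonal: the standard basis vectors are eigenvectors.
        if a >= c:
            return (lam1, lam2), ([1.0, 0.0], [0.0, 1.0])
        return (lam1, lam2), ([0.0, 1.0], [1.0, 0.0])
    # v = (b, lam1 - a) solves (A - lam1 I) v = 0 and is nonzero because b != 0.
    n = math.hypot(b, lam1 - a)
    v1 = [b / n, (lam1 - a) / n]
    # b != 0 makes the eigenvalues distinct, so the eigenspaces are orthogonal:
    # rotating v1 by 90 degrees gives a unit eigenvector for lam2.
    v2 = [-v1[1], v1[0]]
    return (lam1, lam2), (v1, v2)

def classify_quadratic_form(a, b, c, tol=1e-12):
    """Classify Q(x, y) = a x^2 + 2 b x y + c y^2 by the signs of A's eigenvalues."""
    (lam1, lam2), _ = sym_eig_2x2(a, b, c)
    pos = sum(1 for lam in (lam1, lam2) if lam > tol)
    neg = sum(1 for lam in (lam1, lam2) if lam < -tol)
    if pos and neg:
        return "indefinite"
    if pos == 2:
        return "positive definite"
    if neg == 2:
        return "negative definite"
    if pos == 1:
        return "positive semidefinite"
    if neg == 1:
        return "negative semidefinite"
    return "zero form"
```

For example, A = [[2, 1], [1, 2]] has eigenvalues 3 and 1 with orthogonal eigenvectors along [1, 1] and [-1, 1], so the form 2x^2 + 2xy + 2y^2 is positive definite, while xy (a = c = 0, b = 1/2) has eigenvalues of opposite sign and is indefinite.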

Final Assessment
